Conference Publications

2025

-

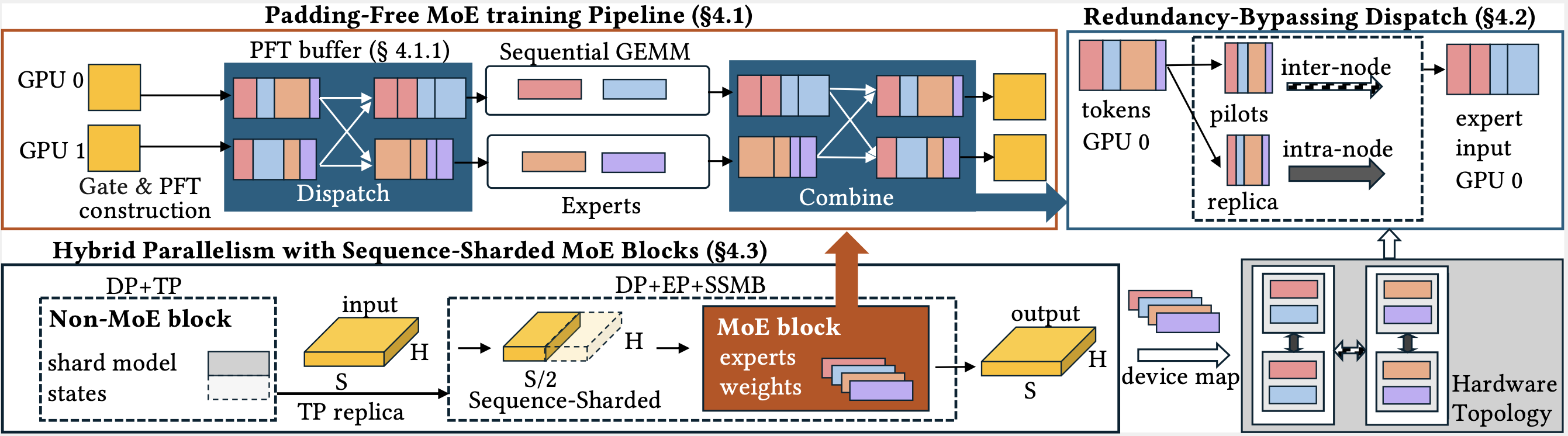

X-MoE: Enabling Scalable Training for Emerging Mixture-of-Experts Architectures on HPC Platforms. Yueming Yuan, Ahan Gupta, Jianping Li, Sajal Dash, Feiyi Wang, Minjia Zhang.

SC ’2025 (Acceptance: 21.5%).

Best Student Paper

-

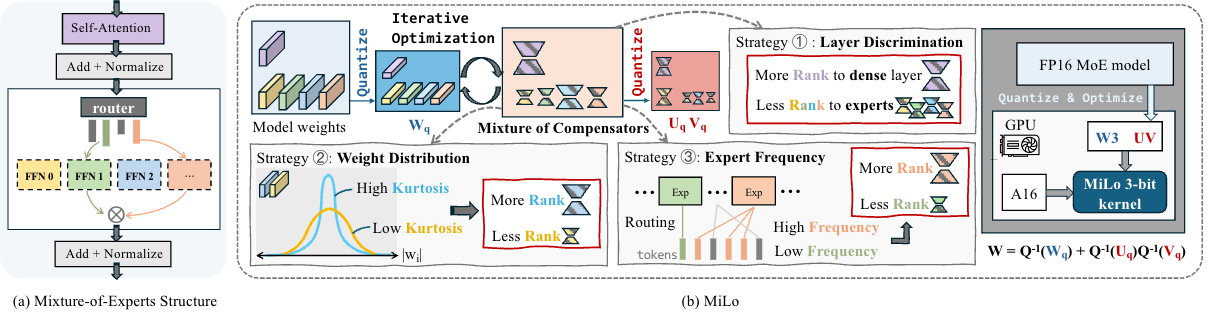

MiLo: Efficient Quantized MoE Inference with Mixture of Low-Rank Compensators. [pdf]

Beichen Huang*, Yueming Yuan*, Zelei Shao*, Minjia Zhang (*Equal contribution).

MLSys ’2025 (Acceptance: 22%).

-

SPLAT: A framework for optimised GPU code-generation for SParse reguLar ATtention. [pdf]

Ahan Gupta, Yueming Yuan, Devansh Jain, Yuhao Ge, David Aponte, Yanqi Zhou, Charith Mendis.

OOPSLA ’2025.